Introduction to the “Attention is All You Need” Concept

Have you ever wondered how AI can translate languages, write essays, or chat with you the way two people would? Behind these capabilities lies an AI architecture called the Transformer, introduced in the 2017 paper “Attention is All You Need.” Today, I’ll break down this technology in a way that anyone can understand, no computer science degree required!

What is a Transformer?

A Transformer is a type of artificial intelligence model that has revolutionized how computers understand and generate human language. Unlike earlier AI models that processed text word by word (imagine reading a book one word at a time, forgetting earlier context), Transformers can look at entire sentences or paragraphs simultaneously.

Think of a Transformer as a super-reader that can:

- Process an entire text at once

- Understand relationships between words that are far apart

- Generate human-like responses based on what it’s learned

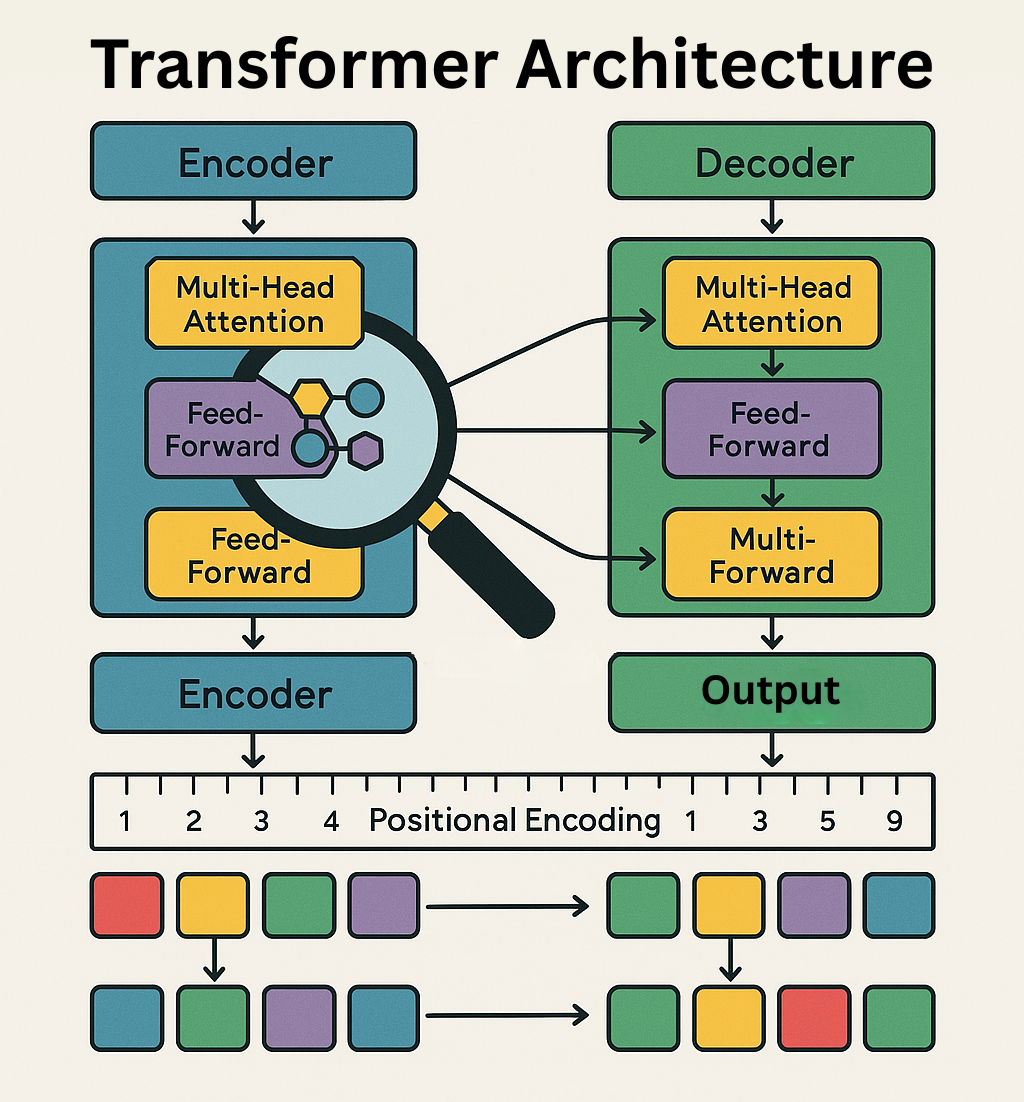

The Architecture: Encoders and Decoders

The Transformer consists of two main components that work together like a well-oiled machine:

The Encoder: This is like the reader in our AI system. It takes your input text (like a question or a sentence in English) and converts it into a mathematical representation that the computer can work with. Imagine translating your words into a special code that captures not just the words themselves, but their meanings and relationships.

The Decoder: This is like the writer. It takes the mathematical representation created by the encoder and generates an output (like an answer to your question or a translation of your sentence into Spanish). The decoder creates text by predicting which word should come next, one word at a time.

The Secret Sauce: The Attention Mechanism

The real innovation in Transformers is something called the “attention mechanism”—and it’s exactly what it sounds like!

How Attention Works

Have you ever tried to listen to someone in a crowded room? Your brain naturally focuses on their voice while filtering out background noise. The attention mechanism works similarly:

- It helps the AI focus on the most relevant parts of the text for the task at hand

- It weighs the importance of each word in relation to all other words

- It allows the model to “pay attention” to connections between words, even if they’re far apart

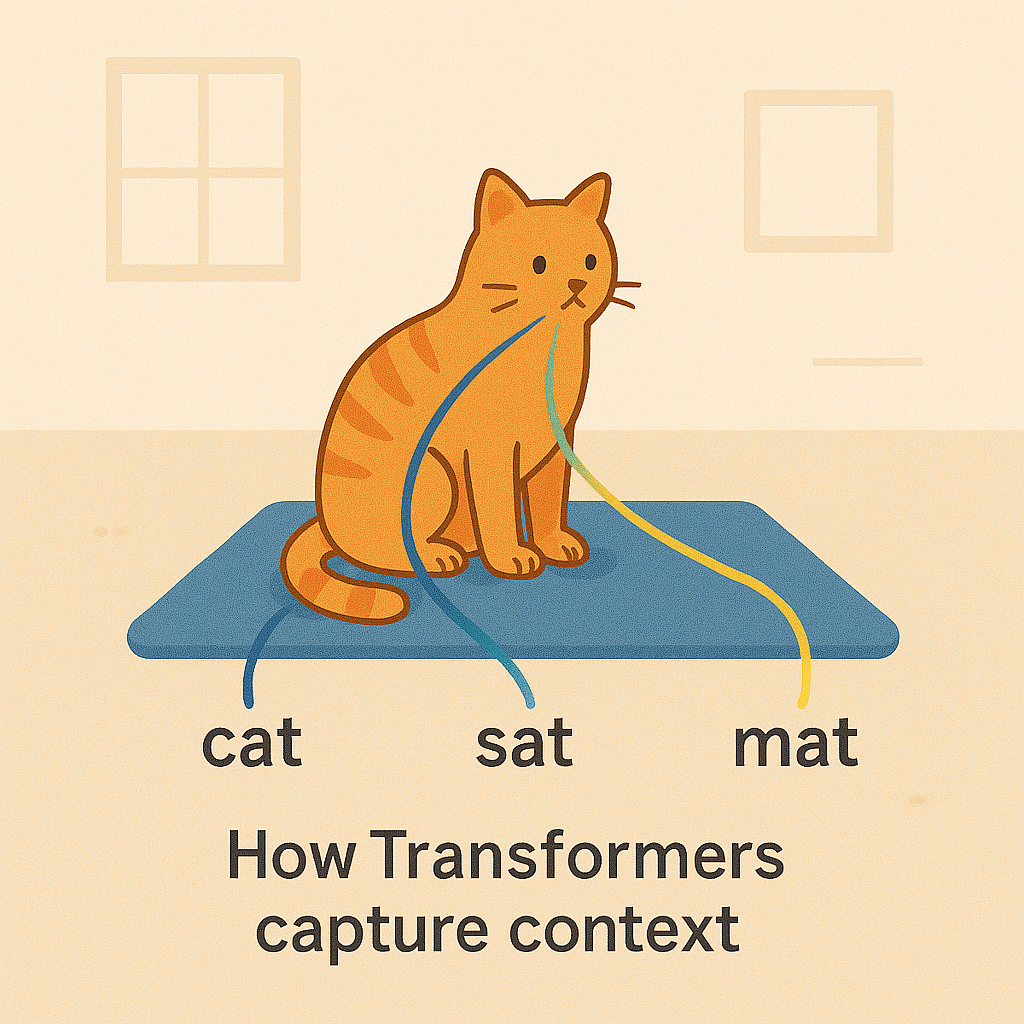
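For readers who like to see the idea in code, the three bullets above can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions, not the paper's full implementation: the two-number word vectors are made up, and the helper names `softmax` and `attention` are just labels I chose. The sketch scores each word against a "query," turns the scores into weights that add up to 1, and blends the words' values using those weights.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    peak = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Score each word: how well does its key match the query?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors, giving more say to higher-weighted words.
    dim = len(values[0])
    blended = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, blended

# Made-up vectors for three words (illustrative numbers only).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 1.0]

weights, blended = attention(query, keys, values)
print([round(w, 3) for w in weights])
```

With these toy numbers, the third word matches the query best, so it receives the largest weight. The other two words are not ignored; they simply contribute less to the blend.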

Self-Attention: The Superpower

Self-attention is what makes Transformers truly special. It allows the model to look at every word in relation to every other word in the sentence—all at the same time!

For example, in the sentence “The animal didn’t cross the road because it was too wide,” what does “it” refer to? You know it’s the road, not the animal. With self-attention, the AI can make this connection too, by “attending” to the relationship between “it” and “road.”
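Here is a rough sketch of that “it” example in Python. Please treat it as a toy: the word vectors are hand-picked by me so that “it” sits close to “road” (a real model learns its vectors from data), and I skip the learned query, key, and value projections that a real Transformer applies first. The function name `self_attention_weights` is hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention_weights(vectors):
    """Return an n x n table: row i shows how much word i attends to each word."""
    scale = math.sqrt(len(vectors[0]))
    table = []
    for query in vectors:  # every word takes a turn as the query
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        table.append(softmax(scores))
    return table

words = ["animal", "road", "it", "wide"]
# Hand-picked toy vectors (an assumption, not learned values).
vectors = [
    [1.0, 0.0, 0.0],  # animal
    [0.0, 1.0, 0.5],  # road
    [0.0, 0.9, 0.4],  # it
    [0.0, 0.6, 0.8],  # wide
]

table = self_attention_weights(vectors)
it_row = dict(zip(words, table[words.index("it")]))
print(max(it_row, key=it_row.get))
```

Every word gets its own row of weights, and each row is computed independently of the others. That independence is what lets the model look at all word pairs at the same time.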

Multi-Head Attention: Looking from Multiple Angles

To make things even better, Transformers use something called “multi-head attention.” Imagine being able to look at a painting from different angles to appreciate different aspects of it. Multi-head attention works similarly—it lets the AI look at text from multiple perspectives simultaneously:

- One “head” might focus on grammar

- Another might focus on topic relationships

- Yet another might focus on sentiment or emotion

By combining these different perspectives, the AI gets a much richer understanding of language.
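To make “multiple heads” a little more concrete, here is a simplified sketch. It assumes each head simply works on its own slice of every word vector; a real Transformer gives each head its own learned projections and mixes the results with one more learned layer, which I leave out. The names `attend` and `multi_head_attention` and the numbers are my own.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(vectors):
    """Plain self-attention: return one blended vector per word."""
    dim = len(vectors[0])
    scale = math.sqrt(dim)
    outputs = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)])
    return outputs

def multi_head_attention(vectors, num_heads):
    """Split each vector into equal slices, attend within each slice, then rejoin."""
    dim = len(vectors[0])
    if dim % num_heads != 0:
        raise ValueError("vector size must divide evenly among the heads")
    head_dim = dim // num_heads
    combined = [[] for _ in vectors]
    for head in range(num_heads):
        start = head * head_dim
        slices = [v[start:start + head_dim] for v in vectors]
        for row, head_output in zip(combined, attend(slices)):
            row.extend(head_output)  # concatenate this head's view onto the word
    return combined

# Three words, four made-up numbers each; two heads see two numbers apiece.
vectors = [
    [1.0, 0.0, 0.2, 0.9],
    [0.9, 0.1, 0.8, 0.1],
    [0.0, 1.0, 0.3, 0.8],
]
result = multi_head_attention(vectors, num_heads=2)
print(len(result), len(result[0]))
```

Because each head only sees its own slice, two heads can disagree about which words belong together, and the combined output keeps both opinions side by side.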

Why Transformers Changed Everything for AI

Before Transformers came along, AI language models had a significant limitation: they processed text sequentially (one word after another). This was like trying to understand a book by reading it through a tiny window that only shows one word at a time.

Transformers changed the game because:

- They’re faster to train: They process all words in parallel rather than one at a time

- They’re better with context: They can understand relationships between words anywhere in the text

- They scale well: They can be trained on massive amounts of text data

These advantages allowed companies to build increasingly powerful Large Language Models (LLMs) like GPT, BERT, and others that power many of the AI applications you use today.

A Real-World Example: Translation

Let’s break down how a Transformer might translate an English sentence to Spanish:

- You input: “The cat sat on the mat.”

- The encoder processes the entire sentence at once, creating a mathematical representation that captures the meaning.

- The self-attention mechanism figures out relationships: “sat” relates to “cat,” “on” connects “cat” and “mat,” etc.

- The decoder generates the Spanish translation word by word: “El gato se sentó en la alfombra.”

- At each step, the decoder uses attention to focus on relevant parts of the original sentence.

What makes this powerful is that the model considers the entire sentence’s context when translating each word, rather than translating word-by-word without context.
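The “word by word” part of the steps above is really just a loop. The sketch below shows only the shape of that loop: `toy_next_word` is a hypothetical stand-in that reads from a fixed answer, whereas a real decoder would compute the next word by attending to the source sentence and to the words generated so far.

```python
# Fixed answer used by the stand-in "model" below (not a real translation system).
TARGET = ["El", "gato", "se", "sentó", "en", "la", "alfombra.", "<end>"]

def toy_next_word(source_sentence, generated):
    """Pretend decoder step: a real model would use attention here."""
    return TARGET[len(generated)]

def translate(source_sentence, max_words=20):
    """Generate the output one word at a time until the end marker appears."""
    generated = []
    while len(generated) < max_words:
        word = toy_next_word(source_sentence, generated)
        if word == "<end>":
            break
        generated.append(word)  # each new word becomes context for the next step
    return " ".join(generated)

print(translate("The cat sat on the mat."))
```

The key detail is that every step receives both the original sentence and everything produced so far, so each new word is chosen with the full context in view.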

How Transformer Architecture Affects Your AI Interactions

Now that you understand the basics, let’s explore how this architecture impacts your everyday interactions with AI language models:

1. Context Awareness: Your Prompts Don’t Need to Repeat Yourself

Thanks to the Transformer’s self-attention mechanism:

- AI can connect ideas across long prompts without you needing to re-explain concepts

- Example: If you write “Explain quantum physics like I’m 5. Use analogies involving cats,” the AI will link “quantum physics” to “cats” in the response without explicit reminders

- Impact on you: No more awkwardly repeating keywords – just write naturally

2. Nuance Detection: Subtle Hints Matter

The multi-head attention allows AI to:

- Catch implied meaning in your prompts (“Make this email sound more professional” vs. “Make this email sound casual”)

- Recognize tone cues (“Explain this like a pirate” vs. “Explain this formally”)

- Impact on you: You can “steer” AI responses with simple stylistic hints rather than rigid templates

3. Ambiguity Resolution: AI Will Ask for Clarification (Sometimes)

Self-attention helps AI:

- Spot unclear terms by analyzing their relationship to other words

- Example: If you write “Explain the bank,” AI may recognize the ambiguity (financial institution vs. riverbank) and either:

  - Guess based on context clues elsewhere in your prompt

  - Ask clarifying questions (in systems that allow back-and-forth)

- Impact on you: The clearer your prompt, the less energy AI wastes on “guessing” what you mean

4. Long-Form Understanding: You Can Give Complex Instructions

Earlier sequential models tended to lose track of the early parts of long inputs. Transformers let AI:

- Remember instructions from your first sentence even in lengthy prompts

- Maintain consistent voice/style across long responses

- Impact on you: You can write elaborate prompts with multiple requirements (e.g., “Write a sci-fi story about AI, use 3-act structure, include a plot twist, and make the protagonist a grumpy librarian”)

5. Prompt Optimization: How You Phrase Things Changes AI Focus

The attention mechanism prioritizes words based on their position and relationships:

- Front-loading key terms (“Prioritize brevity: [your request]”) focuses attention on conciseness

- Using separators (--- or """) helps distinguish instructions from examples

- Impact on you: Small formatting tweaks can dramatically improve results
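If you assemble prompts in code, the two tips above might look something like this. It is only a sketch: `build_prompt` is a hypothetical helper of my own, and the `---` separator is one reasonable choice rather than a required format.

```python
def build_prompt(instruction, examples, user_text, separator="---"):
    """Assemble a prompt whose sections are fenced off by a separator line."""
    sections = [
        instruction,  # the key instruction goes first
        "Examples:\n" + "\n".join(examples),
        "Text:\n" + user_text,
    ]
    return f"\n{separator}\n".join(sections)

prompt = build_prompt(
    "Prioritize brevity: summarize the text in one sentence.",
    ["Input: a long email -> Output: a one-line summary"],
    "The quarterly meeting covered budgets, hiring, and the office move.",
)
print(prompt)
```

The separator lines make it obvious where the instruction stops and the examples begin, so the example text is less likely to be mistaken for something to act on.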

6. Working Around Limitations: Token Windows

While attention is powerful, LLMs still have token limits (typically between 4,000 and 128,000 tokens depending on the model; a token is a word or a piece of a word). This means:

- Extremely long prompts may get truncated, weakening context

- Pro tip: Put critical information early in your prompt, and avoid redundant details
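Here is one way the pro tip could look in code. Big caveat: counting whitespace-separated words is only a crude stand-in for real token counting, since every model has its own tokenizer, and both function names are hypothetical. The sketch keeps the critical instruction first and adds optional details only while they still fit the budget.

```python
def rough_token_count(text):
    """Crude estimate: count whitespace-separated words (real tokenizers differ)."""
    return len(text.split())

def fit_to_budget(critical, extras, max_tokens):
    """Keep the critical text first, then add extras while they still fit."""
    kept = [critical]
    used = rough_token_count(critical)
    for extra in extras:
        cost = rough_token_count(extra)
        if used + cost > max_tokens:
            break  # this extra and everything after it is left out
        kept.append(extra)
        used += cost
    return "\n".join(kept), used

prompt, used = fit_to_budget(
    "Summarize this report in three bullet points.",
    [
        "Focus on costs.",
        "Mention the timeline too.",
        "Also, here is some background you can skip.",
    ],
    max_tokens=12,
)
print(used)
print(prompt)
```

Ordering the extras from most to least important means that whatever gets dropped is the material you cared about least.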

Practical Takeaways for Better LLM Interactions

| Because of Attention… | You Should… |
| --- | --- |
| AI analyzes word relationships | Use clear references instead of vague pronouns (“The CEO mentioned earlier” vs. “She”) |
| AI prioritizes recent/strongest signals | Place key instructions first |
| AI mirrors your phrasing/style | “Show, don’t tell” (“Write angrily about traffic” vs. “Write a complaint”) |
| AI handles structured logic better with guidance | Break complex tasks into steps (“Step 1: Identify key themes. Step 2:…”) |

Beyond Language: Transformers Everywhere

While Transformers were originally designed for language tasks, their architecture has proven so effective that it’s now used for:

- Generating images from text descriptions

- Video understanding

- Protein structure prediction in biology

- Music generation

- And many other applications!

Why This Matters to You

Even if you never write a line of code, Transformers affect your daily life. They power:

- The search engines you use

- The translation tools you rely on

- Customer service chatbots

- Content recommendation systems

- AI assistants

The Bottom Line

The “Attention is All You Need” architecture represented a paradigm shift in artificial intelligence. By allowing AI to process information more like humans do—focusing on what’s important and understanding context—Transformers have enabled a new generation of AI systems that can understand and generate human language with remarkable fluency.

The Transformer architecture isn’t just technical trivia; it’s why you can chat with LLMs casually instead of using rigid command-line syntax. The better you understand how LLMs pay attention, the more effectively you can “program” any LLM through natural language!

Now that you understand how attention mechanisms work, you can interact with AI systems more effectively. The next time you chat with an AI assistant or use a translation tool, remember: behind the scenes, attention truly is all it needs!